The Problem: Getting Real-time Data into Kafka

Have you ever tried to stream Wikipedia changes or other real-time Server-Sent Events (SSE) into Kafka? If you have, you probably wrote a custom SSE client and Kafka producer to handle those events, managed reconnections yourself, and built your own error handling.

There had to be a better way.

Introducing the SSE Kafka Connector

I’m excited to share my new open-source project: a generic Kafka Connect connector for Server-Sent Events. It lets you stream data from any SSE endpoint straight into Kafka topics, with no custom code required.

GitHub Repository

What Can You Do With It?

Stream Wikipedia Changes

```json
{
  "sse.url": "https://stream.wikimedia.org/v2/stream/recentchange",
  "data.format": "json",
  "topic.strategy": "static",
  "kafka.topic": "wiki-changes"
}
```

Watch page edits, detect vandalism, or track trends across Wikipedia in real time.
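Once the events are in Kafka, a downstream consumer can pick out the ones it cares about. The sketch below is a hypothetical consumer-side filter, not part of the connector: it assumes each record value on the `wiki-changes` topic is one JSON-encoded Wikimedia `recentchange` event, and it keeps only human (non-bot) page edits on English Wikipedia using that schema's `type`, `bot`, and `wiki` fields. The sample events are made up for illustration.

```python
import json

def is_human_enwiki_edit(raw_value: str) -> bool:
    """Return True for non-bot page edits on English Wikipedia.

    Assumes raw_value is one JSON-encoded Wikimedia `recentchange` event,
    as it might appear in a record value on the wiki-changes topic.
    """
    event = json.loads(raw_value)
    return (
        event.get("type") == "edit"        # page edits, not new pages or log entries
        and event.get("wiki") == "enwiki"  # English Wikipedia only
        and not event.get("bot", False)    # skip changes flagged as bot edits
    )

# Made-up sample record values, shaped like recentchange events
SAMPLE_EVENTS = [
    '{"type": "edit", "wiki": "enwiki", "bot": false, "title": "Apache Kafka"}',
    '{"type": "edit", "wiki": "enwiki", "bot": true, "title": "Some page"}',
    '{"type": "new", "wiki": "dewiki", "bot": false, "title": "Neue Seite"}',
]

print([json.loads(v)["title"] for v in SAMPLE_EVENTS if is_human_enwiki_edit(v)])
# ['Apache Kafka']
```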

Monitor GitHub Events

```json
{
  "sse.url": "https://github-events-proxy.example.com/events",
  "sse.headers": "Authorization:Bearer YOUR_TOKEN",
  "topic.strategy": "field",
  "topic.field": "$.repository.name"
}
```

Get notified about new issues, commits, and PRs as they happen.
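With `"topic.strategy": "field"`, each event's topic comes from a value inside the event itself. The sketch below shows one way such routing could work; it is an illustration, not the connector's code. It assumes `topic.field` is a simple dotted JSONPath (`$.a.b`, no wildcards or array indexes), falls back to a hypothetical `default_topic` when the field is missing, and replaces characters Kafka does not allow in topic names (Kafka accepts only ASCII letters, digits, `.`, `_`, and `-`, up to 249 characters, and rejects the names `.` and `..`).

```python
import json
import re

MAX_TOPIC_LEN = 249                                  # Kafka's maximum topic name length
ILLEGAL_CHARS = re.compile(r"[^a-zA-Z0-9._-]")       # anything Kafka rejects in a topic name

def resolve_path(event, path):
    """Follow a simple dotted JSONPath such as '$.repository.name'."""
    node = event
    for key in (path[2:] if path.startswith("$.") else path).split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node

def route_topic(raw_value, topic_field, default_topic):
    """Pick a topic from a field of the event, falling back to default_topic."""
    value = resolve_path(json.loads(raw_value), topic_field)
    if value is None or value == "":
        return default_topic  # hypothetical fallback when the field is missing or empty
    topic = ILLEGAL_CHARS.sub("_", str(value))[:MAX_TOPIC_LEN]
    return default_topic if topic in (".", "..") else topic  # Kafka rejects "." and ".."

event = '{"repository": {"name": "kafka connect/sse"}, "action": "opened"}'
print(route_topic(event, "$.repository.name", "github-events"))                  # kafka_connect_sse
print(route_topic('{"action": "opened"}', "$.repository.name", "github-events"))  # github-events
```

Sanitizing instead of dropping the event keeps one oddly named repository from stalling the stream, at the cost of two different names possibly mapping to the same topic.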

Track Financial Updates

```json
{
  "sse.url": "https://market-data.example.com/stocks",
  "json.key.field": "$.symbol",
  "json.value.fields": "$.price,$.change",
  "kafka.topic": "stock-updates"
}
```

Stream market data for real-time analytics and alerts.
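Here `json.key.field` picks the record key and `json.value.fields` lists which fields to keep in the record value. The sketch below illustrates that projection under two assumptions: both settings use the same simple dotted JSONPath syntax, and the projected value is a JSON object keyed by the last segment of each path (the connector may shape the value differently). The quote is made-up sample data.

```python
import json

def resolve_path(event, path):
    """Follow a simple dotted JSONPath such as '$.symbol'."""
    node = event
    for key in (path[2:] if path.startswith("$.") else path).split("."):
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node

def to_key_value(raw_value, key_field, value_fields):
    """Return (key, value) for one SSE data payload."""
    event = json.loads(raw_value)
    key = resolve_path(event, key_field)
    paths = [p.strip() for p in value_fields.split(",") if p.strip()]
    # Name each projected field after the last segment of its path
    value = {p.split(".")[-1]: resolve_path(event, p) for p in paths}
    return key, json.dumps(value)

raw = '{"symbol": "ACME", "price": 101.5, "change": -0.25, "volume": 12000}'
key, value = to_key_value(raw, "$.symbol", "$.price,$.change")
print(key, value)  # ACME {"price": 101.5, "change": -0.25}
```

Keying records by symbol matters downstream: with Kafka's default partitioner, every update for one symbol lands in the same partition, so consumers see that symbol's prices in order.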

See It in Action

```shell
# Start the Docker environment
docker-compose up -d

# Deploy the connector with the Wikimedia config
curl -X POST -H "Content-Type: application/json" \
  --data @config/json/wikimedia-sse-connector.json \
  http://localhost:8083/connectors

# Watch the data flow into Kafka (the topic must match kafka.topic in the deployed config)
docker exec kafka kafka-console-consumer \
  --bootstrap-server kafka:9092 \
  --topic wikimedia-changes --from-beginning
```

How It Works

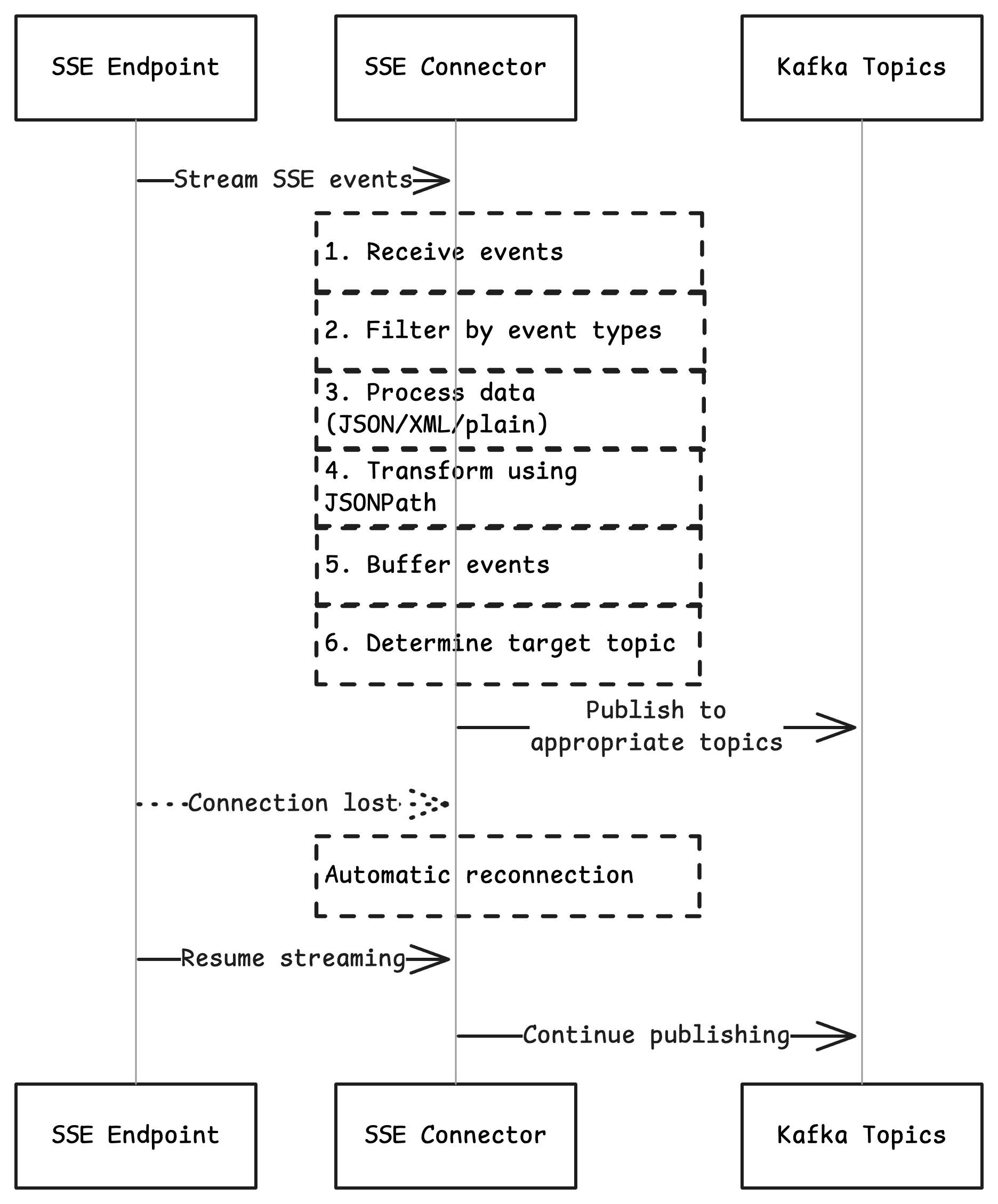

The connector:

- Establishes a connection to any SSE endpoint

- Filters events based on what you’re interested in

- Processes the data (JSON, XML, plain text)

- Routes events to the right Kafka topics
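The first step, reading events off the SSE connection, follows the event-stream format defined in the HTML Living Standard: each event is a group of `field: value` lines ended by a blank line, multiple `data` lines are joined with newlines, `id` sets the last event ID, and lines starting with `:` are comments. The sketch below parses that format from already-split text lines. It illustrates the protocol only; it is not the connector's own implementation, and it leaves out the HTTP connection, reconnection, and `retry` handling.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional

@dataclass
class SseEvent:
    event: str          # event type; "message" when the stream sets none
    data: str           # data lines joined with "\n"
    id: Optional[str]   # last event ID seen so far, if any

def parse_sse(lines: Iterable[str]) -> Iterator[SseEvent]:
    """Yield events from SSE lines (line endings already stripped)."""
    event_type, data_lines, last_id = "", [], None
    for line in lines:
        if line == "":
            # Blank line: dispatch the buffered event if it carried any data
            if data_lines:
                yield SseEvent(event_type or "message", "\n".join(data_lines), last_id)
            event_type, data_lines = "", []  # the last event ID persists
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, sep, value = line.partition(":")
        if sep and value.startswith(" "):
            value = value[1:]  # strip exactly one leading space
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
        elif field == "id" and "\0" not in value:
            last_id = value
        # "retry" and unknown fields are ignored in this sketch
    # An unterminated event at end of stream is discarded, as the spec requires

raw = """: keep-alive
id: 1
data: {"title": "Apache Kafka"}

event: update
data: line one
data: line two

"""
for ev in parse_sse(raw.splitlines()):
    print(ev)
```

Each dispatched `data` string is what a connector would then filter, convert, and route in the later steps.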

Why I Built This

I wanted a simple, reusable way to integrate SSE streams with Kafka without reinventing the wheel each time. This connector abstracts away the complexity, letting you focus on building applications instead of plumbing. I also wanted to try out real-world event streaming use cases with Kafka Connect, especially the Wikimedia stream, which is a great source of real-time data.

Try It Today

Ready to simplify your event streaming? Check out the GitHub repository and give it a star if you find it useful!

Questions or ideas? Open an issue or contribute to the project. I’d love to hear how you’re using it.

Stay Connected

- Blog: https://joel-hanson.github.io/

- GitHub: Joel-hanson

- LinkedIn: Joel-hanson

For more Kafka Connect tips and open-source tools, follow the blog series and star the repository.